Graphics by AJP Song Ji-yoon

SEOUL, December 01 (AJP) - As Google’s tensor processing unit (TPU) rises as a formidable challenge to Nvidia’s near-monopoly in AI computing, the clearest signals of how the rivalry is unfolding may not be found in the chips themselves, but in the earnings and order books of Korea’s two memory giants: Samsung Electronics and SK hynix.

Samsung is said to have supplied more than 60 percent of the high-bandwidth memory (HBM) used in Google’s TPU designs through Broadcom, Google’s chip-design partner, and is expected to expand its share next year with sixth-generation HBM4.

Until the first half of this year, SK hynix had been the primary supplier of HBM3E chips for Google’s Ironwood TPU, but that dynamic may shift in the second half and into next year, analysts say.

Each TPU, typically equipped with six to eight HBM stacks, is also believed to cost up to 80 percent less than Nvidia’s H100 GPU, a key reason hyperscalers are accelerating adoption.

A structural shift beneath the GPU–TPU rivalry

Behind the GPU-versus-TPU debate lies a broader transformation in how artificial intelligence infrastructure is being built.

According to Kyung Hee-kwon, a senior researcher at the Korea Industrial Research Institute, the global AI transition is increasingly being shaped not by chipmakers, but by big tech platforms designing computing systems around their own data and workloads.

“AI today is being led by platform companies — what many refer to as the Magnificent Seven,” Kyung said. “These firms are focused on agentic AI that enables large-scale automation, rather than fully autonomous human-like intelligence.”

For years, Nvidia’s GPUs were seen as indispensable for AI computation. But semiconductors, Kyung noted, are tools — not ends in themselves.

“If a chip delivers better power efficiency and performance for a specific purpose, there is no inherent reason it must be a GPU,” he said.

Google’s TPU, developed over several years and now deployed at scale in its data centers, exemplifies this shift. Google is not bound by Nvidia’s CUDA software ecosystem and instead operates a vertically integrated stack, allowing TPU accelerators to demonstrate efficiency gains in targeted workloads.

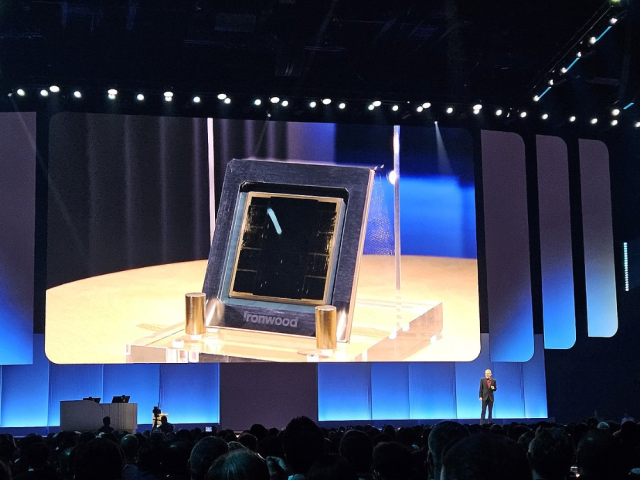

Google’s seventh-generation TPU, Ironwood, unveiled at Google Cloud Next 2025 in Las Vegas on April 12, 2025. Yonhap

Still, Kyung emphasized that TPUs and GPUs serve complementary roles.

“This is not about GPUs being replaced altogether. GPUs remain critical for training and general-purpose computing. What we are seeing is the emergence of alternative accelerators — especially where power efficiency and supply constraints matter.”

Supply bottlenecks push platforms toward custom silicon

With global foundry capacity stretched and delivery lead times extending into years, hyperscalers are increasingly unwilling to wait for GPUs.

“AI has become a technology tied to national competitiveness and security,” Kyung said. “If GPU supply cannot meet immediate demand, companies will seek viable alternatives that can be deployed now.”

This pressure has accelerated a wave of custom-silicon development far beyond Google, including Amazon’s Trainium, Microsoft’s Maia, and in-house AI accelerators at Tesla and other platforms.

Memory remains the constant, regardless of who wins

For Korea’s memory makers, the implications are structurally favorable regardless of which accelerator architecture gains ground.

“Whether or not computing shifts from GPUs to custom accelerators, Korea’s role fundamentally remains the same,” Kyung said. “High-bandwidth memory, advanced mobile DRAM and graphics memory are essential across all AI architectures. What changes is the route to market, not the underlying demand.”

This explains why Samsung Electronics and SK hynix sit at the center of both GPU- and TPU-driven ecosystems — and why their contrasting exposures offer a clearer lens into the AI race than any single chip announcement.

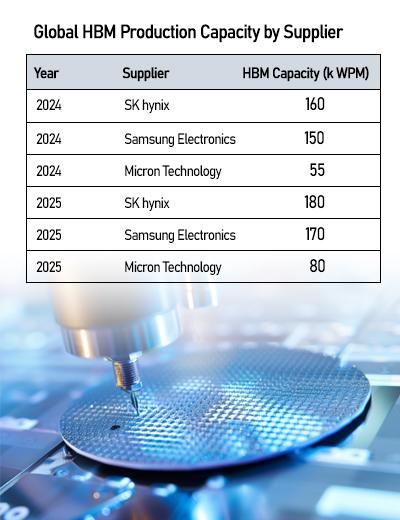

Graphics by AJP Song Ji-yoon

According to Park Yu-ak, an analyst at Kiwoom Securities, Samsung’s growing presence in custom accelerators reflects its broader footprint across memory and foundry, while SK hynix continues to anchor the high-end GPU market through its HBM leadership.

Candice Kim, reporter candicekim1121@ajupress.com
